Figure-Ground Representation in Deep Neural Networks

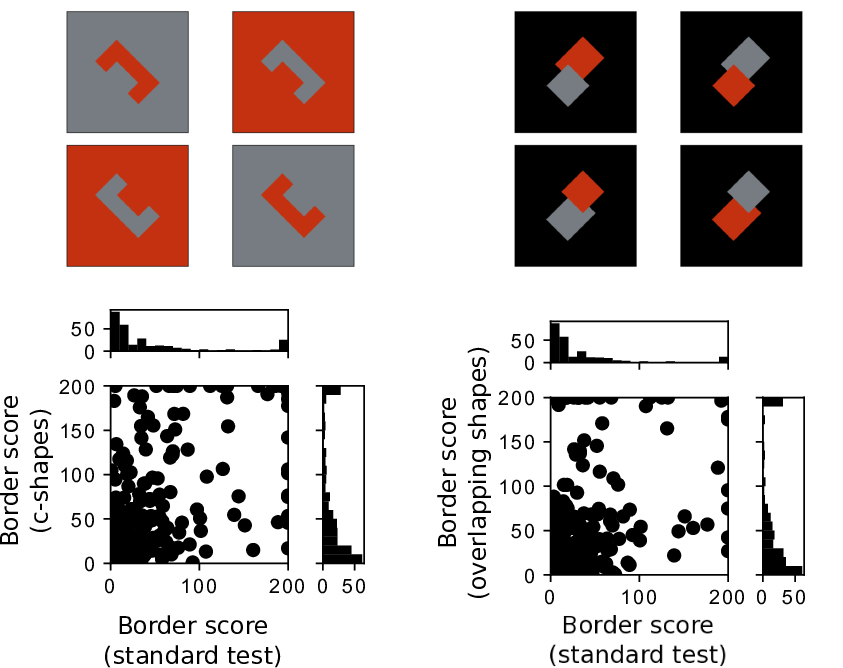

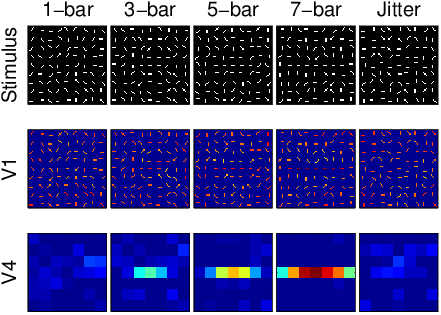

Deep neural networks achieve state-of-the-art performance on many image segmentation tasks. We explored whether deep neural networks use learned representations close to those of biological brains, in particular whether they explicitly represent border ownership selectivity (BOS). We developed a suite of in-silico experiments to test for BOS, similar to experiments that have been used to probe primate BOS. In the networks we tested, we largely found contrast selectivity, with border ownership selectivity appearing only in higher layers.
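To make the distinction between contrast selectivity and BOS concrete, here is a minimal sketch of how the two could be separated for a single unit. It assumes the standard four-condition design (figure on either side of the edge, crossed with both contrast polarities) and a simple normalized-difference index. The function name `selectivity_indices` and the exact index definition are illustrative assumptions, not necessarily the measures used in the paper.

```python
import numpy as np

def selectivity_indices(responses):
    """Return (bos_index, contrast_index) for one unit.

    `responses` is a 2x2 array of non-negative activations indexed as
    [figure_side, contrast_polarity]. Both indices lie in [-1, 1]:
    a unit driven only by figure side has |bos_index| > 0 and
    contrast_index == 0, and vice versa for a pure contrast unit.
    This index definition is an assumption made for illustration.
    """
    r = np.asarray(responses, dtype=float)
    total = r.sum()
    if total == 0:
        return 0.0, 0.0
    # Pool over contrast polarity to isolate the effect of figure side.
    bos_index = (r[0].sum() - r[1].sum()) / total
    # Pool over figure side to isolate the effect of contrast polarity.
    contrast_index = (r[:, 0].sum() - r[:, 1].sum()) / total
    return float(bos_index), float(contrast_index)

# Hypothetical activations: one unit that cares about figure side,
# one that cares only about local contrast polarity.
side_unit = [[4.0, 4.0], [1.0, 1.0]]
contrast_unit = [[4.0, 1.0], [4.0, 1.0]]
print(selectivity_indices(side_unit))
print(selectivity_indices(contrast_unit))
```

Pooling over the other factor before taking the difference is what keeps a purely contrast-driven unit from registering as border-ownership selective.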

| Code | Paper |

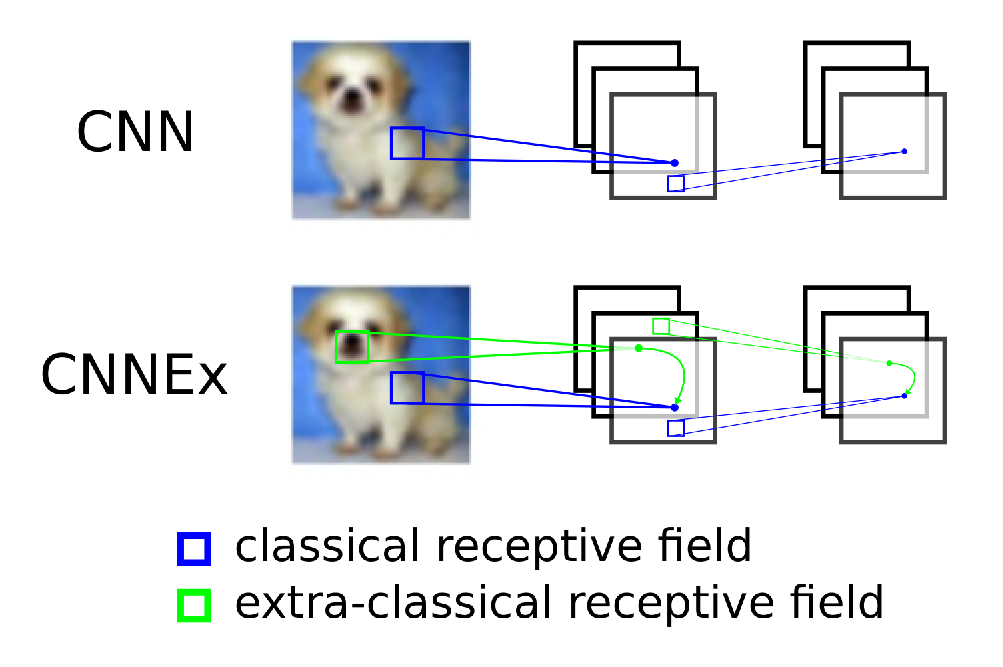

Convolutional Neural Networks with Extra-classical Receptive Fields

Convolutional neural networks (CNNs) largely rely on feedforward connections, ignoring the influence of recurrent connections. They also focus on supervised rather than unsupervised learning. To address these issues, we combine traditional supervised learning via backpropagation with a specialized unsupervised learning rule to learn lateral connections between neurons within a convolutional neural network. These connections optimally integrate information from the surround, generating extra-classical receptive fields for the neurons in our newly proposed model (CNNEx).

| Code | Paper |

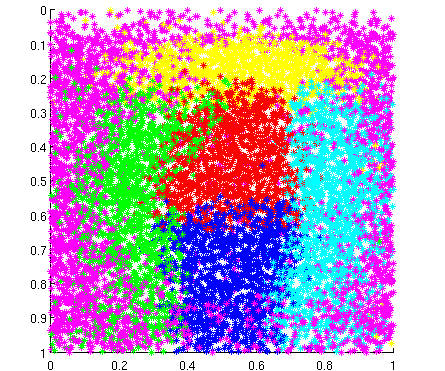

Bayesian Object Recognition

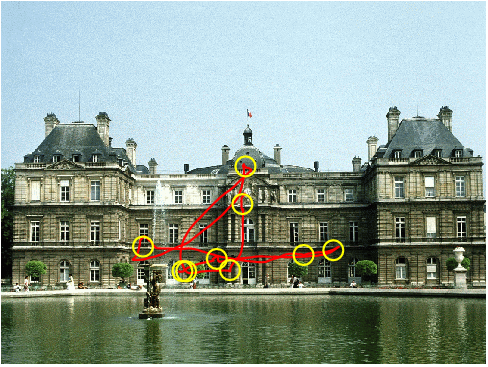

During visual perception of complex objects, humans shift their gaze to different regions of a particular object in order to gain more information about that object. We propose a parts-based, Bayesian framework for integrating information across receptive fields and fixation locations in order to recognize objects.
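As a rough illustration of integrating evidence across fixations, the sketch below applies Bayes' rule repeatedly to a posterior over object classes. It assumes that observations at different fixations are conditionally independent given the object and that every likelihood is strictly positive. The function name `integrate_fixations` and the numbers are hypothetical and are not taken from the report.

```python
import numpy as np

def integrate_fixations(prior, likelihoods):
    """Posterior over object classes after a sequence of fixations.

    prior:       P(object), one entry per class.
    likelihoods: one array per fixation, holding P(observation | object)
                 for each class. Fixations are assumed conditionally
                 independent given the object (a simplifying assumption).
    """
    # Work in log space so many fixations do not underflow.
    log_post = np.log(np.asarray(prior, dtype=float))
    for lik in likelihoods:
        log_post = log_post + np.log(np.asarray(lik, dtype=float))
    # Subtract the max before exponentiating for numerical stability.
    post = np.exp(log_post - log_post.max())
    return post / post.sum()

# Two candidate objects, two fixations that both favor the first object.
posterior = integrate_fixations(
    prior=[0.5, 0.5],
    likelihoods=[[0.8, 0.2], [0.6, 0.4]],
)
print(posterior)
```

Each additional fixation multiplies in one more likelihood term, so the posterior sharpens as informative parts of the object are sampled.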

| Report |

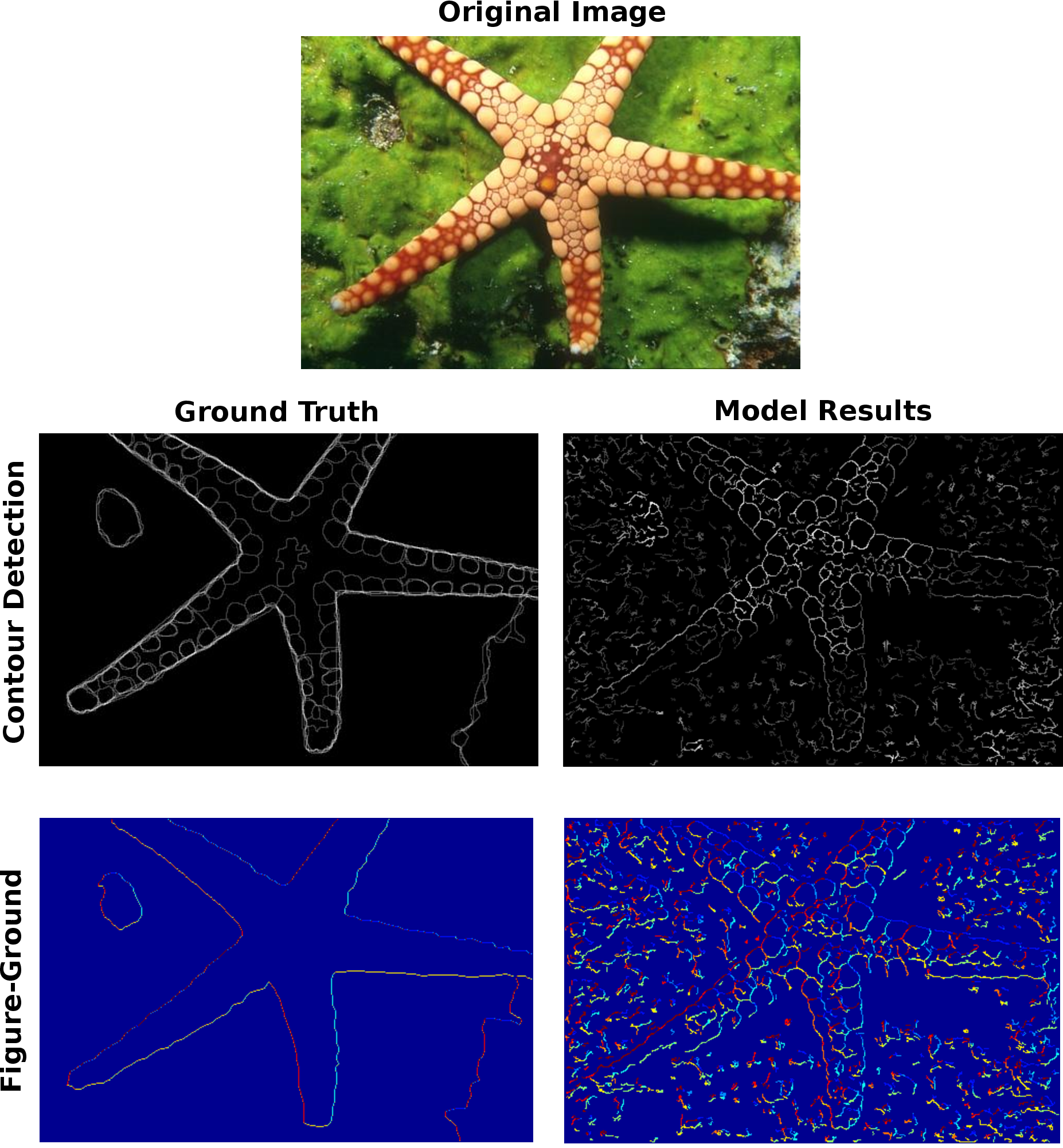

Figure-Ground Segmentation in Natural Images

Figure-ground segmentation is essential for understanding natural scenes. However, the neural mechanisms of this process remain unclear. We propose a fully image-computable model that performs both contour detection and figure-ground segmentation on natural images. We compare our model results to the responses of border-ownership-selective neurons and also evaluate our model using the Berkeley Segmentation Dataset (BSDS-300).

| Code | Paper |

Contour Integration and Border-Ownership Assignment

We propose a recurrent neural model that explains several sets of neurophysiological results related to contour integration and border-ownership assignment using the same network parameters. Our model also provides testable predictions about the role of feedback and attention in different visual areas.

| Code | Paper |

Head Movements in Virtual Reality

Natural visual exploration makes use of both head movements and eye movements. Here, we study the role of head movements when viewing large-scale, immersive images in virtual reality. We quantify head movement kinematics under these conditions and show that head movements follow a stereotyped pattern.

| Code/Data | Paper | Paper |

3D Visual Saliency

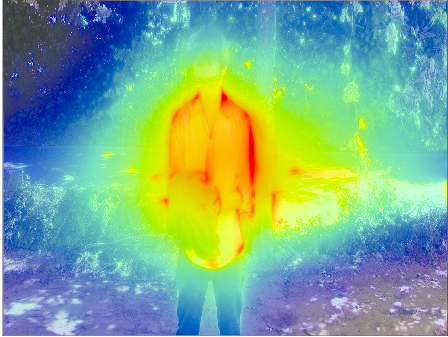

We extend a model of proto-object-based saliency to include depth information. Our results show that the added depth information provides a small but statistically significant improvement in gaze prediction on three separate eye-tracking datasets.

| Code | Paper |

3D Surface Representation

How the brain represents 3D surfaces and directs attention to these surfaces is not well understood. We propose a neural model that groups together disparity-selective neurons that belong to a surface. Our model is also able to reproduce several key psychophysical results, including the spread of attention across surfaces.

| Code | Paper |

I can also be found on Google Scholar.