Information (and misinformation) is becoming overly abundant. Research in neuroscience and machine learning is accelerating rapidly, and it can seem impossible to keep up with all the latest advances. Reading papers is a bit of an art, so I wanted to share some guidelines for how I’ve learned to do it consistently and effectively.


Reading papers takes time and practice. Like physical exercise, reading papers is a skill that develops only with regular practice. Set aside time each week to read papers, and consider it an integral part of your work. When I first started out as a graduate student, every paper I came across seemed novel to me, and reading a single paper could take several hours. However, I stuck with it, and by the end of my PhD I could skim a paper and judge whether it was worth reading in more depth. Over time, I also learned the lay of the field and figured out how to connect the ideas I was reading about to my own research.


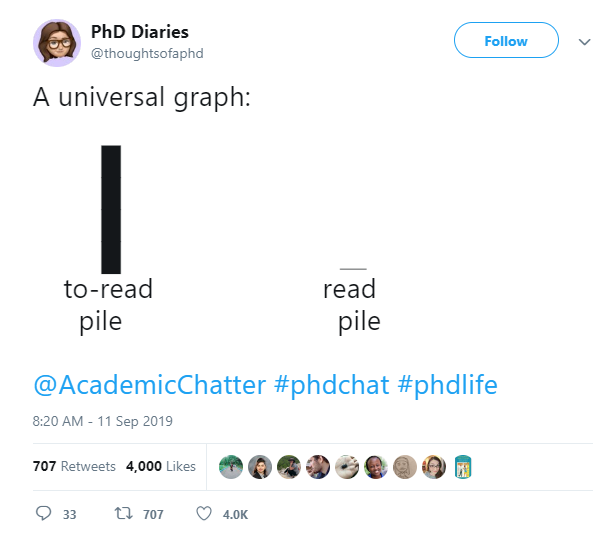

Triage papers. Inevitably, you will have a stack of papers that you want to read and not enough time to read them all, so I have found it important to triage first. Given a stack of papers, I first read the title and author list of each one. I set aside papers whose titles are not closely related to my current research or that sit too far off in an unrelated field. Although not universally true, papers from well-established labs usually have greater credibility, and they can also be interesting to read if you have been following that lab’s research trajectory. Using social media as an extra pre-filtering step can also be helpful: papers that have gained traction online are usually interesting enough for people to share and discuss.


Get a gestalt view. Given this short list of papers, I quickly skim each one to get a gestalt view, typically spending 5-10 minutes per paper. I try to understand the motivation by glancing through the references cited in the introduction. I then flip through the figures, which should tell a story through clear organization and logical flow. Well-written papers distill their main message into the figures, such that you can understand the paper just by looking at them. Finally, I often read the discussion section in more detail, as it should recap the main results and place the research in the larger context of the field. During this first pass, I tend not to focus much on the methods (a personal preference), as these should not be essential for understanding the paper unless it is a pure methods paper.


Understanding comes from explaining. As a quote often attributed to Albert Einstein goes, “If you can’t explain it simply, you don’t understand it well enough.” Take advantage of opportunities to present papers in journal clubs. This will force you to read the paper closely enough to explain it to an audience who may or may not have read it. This step requires the most work, and you may have to read a paper multiple times before truly understanding it. I often find it helpful to read a hard copy, so that I can highlight and write notes on the paper itself. By the end of graduate school, I had a huge stack of these hand-annotated papers, which tracked the evolution of my thinking as a researcher.


Check if open-source code exists. Many papers introduce a new dataset or model implementation, so check whether these are publicly available. Releasing them fosters greater reproducibility (so kudos to the original authors!) and also gives you a head start on any ideas for extensions, without having to rebuild everything from scratch yourself. Looking at the source code can often also give you a better sense of the paper’s methods, where some details may not be specified exactly.

Happy reading!

P.S. Here are some good resources for the latest papers and news in neuroscience and machine learning:

Feeds

Interesting (Computational) Neuroscience Papers

Simons Foundation Global Brain News

Hacker News

Blogs

Google AI Blog

OpenAI Blog

Facebook AI Blog

Fast AI Blog

BAIR Blog

Uber Engineering Blog

Reza Zadeh

Konrad Kording

Subutai Ahmad

Jeremy Howard

Filip Piekniewski

PyTorch