In this video, John Launchbury of DARPA talks about the three waves of A.I., or artificial intelligence. I found the video helpful both for putting the field of A.I. in historical context and for providing a roadmap for its future.

The three waves are:

-

Handcrafted Knowledge

Building in logical reasoning through implementation of a set of rules. This allows for reasoning over narrowly defined problems, but has no learning capability and poor handling of uncertainty.Examples: chess-playing programs, TurboTax

Challenges: early self-driving cars -

Statistical Learning

Good at perceiving and learning about the natural world, but has minimal abstraction or reasoning abilities. Can perform nuanced classification and prediction, but has no contextual capability.Examples: neural nets, deep learning, many diverse real-world applications

Challenges: adversarial examples, requires vast amounts of training data -

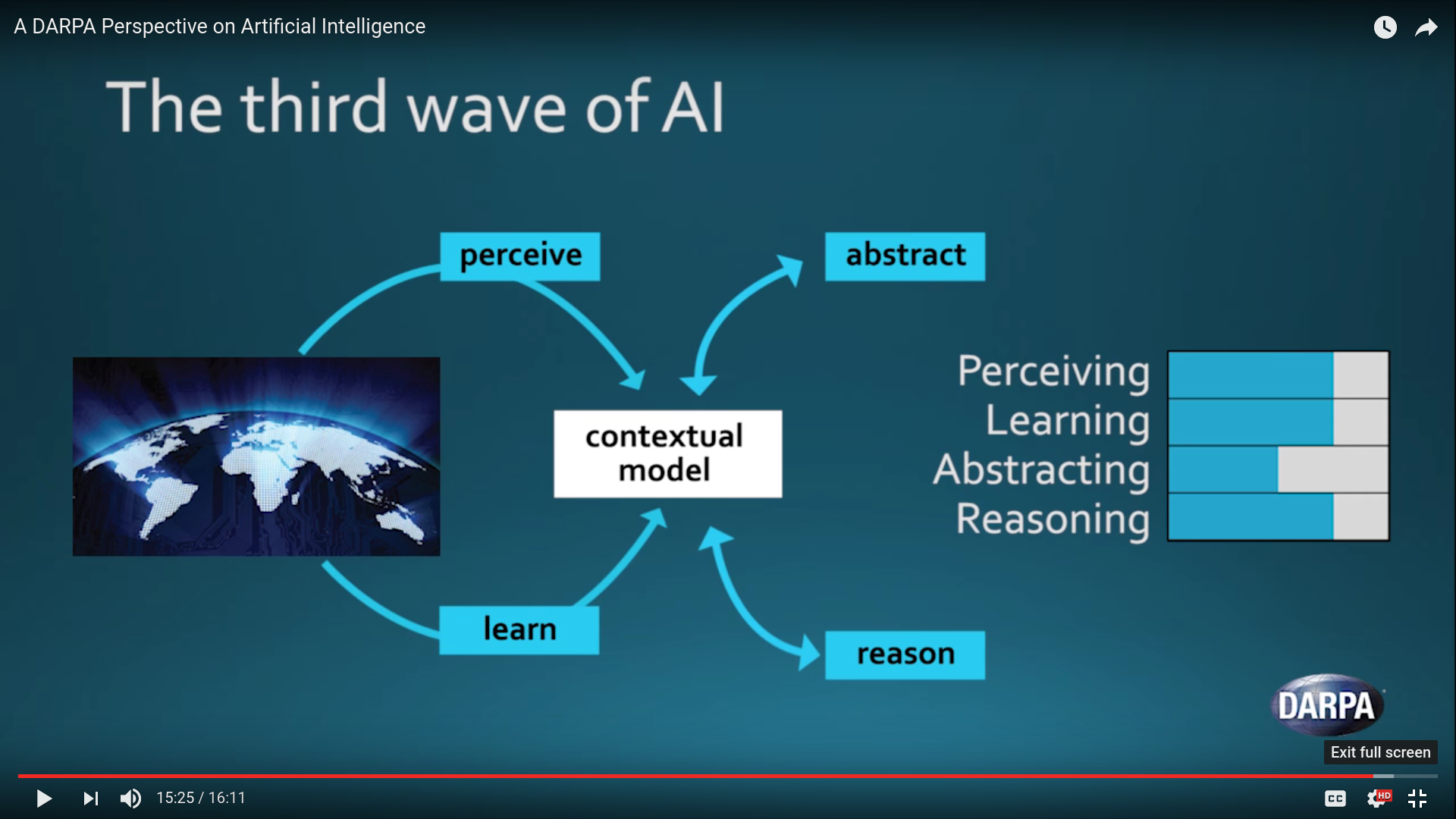

Contextual Adaptation

Learning is used to create a contextual model over time, the world is perceived in terms of this model, which then drives reasoning and abstraction. When interrogated, the model allows us to understand how a decision was made.Examples: one-shot learning, generative models for handwriting

Challenges: requires combining the first two waves, still in early stages

Most of my research has focused on handcrafted knowledge, essentially the art of finding useful features that can explain perceptual phenomena based on first principles. I’m starting to delve more into statistical learning, mainly to understand why deep learning works so well for such a variety of problems. I firmly believe that we will need contextual models, as they are a step closer to the way that we as humans learn and reason. What do you think about these three waves of A.I.? Will the current hype about deep learning die down, or is it here to stay?